How to deploy software onto your hardware platform

Goal: put software on hardware

It's the oldest business model in Silicon Valley:

- Build custom hardware.

- Write software to run on that hardware.

- Get paid to use this combo to solve people's problems.

This three-step formula has generated trillions in market capitalization—Apple, Nvidia, and AWS are all good examples of this strategy at work. Hardware builds their moat and software pads their margin. It's simple!

Problem: it's hard though

Unfortunately, for robots and other embedded devices, no one has really perfected this strategy's technical underpinnings. While many well-architected, device-specific systems exist, the core challenge of how to install your software on your custom hardware still has no canonical solution.

Between two worlds

This problem endures because these products exist at the intersection of two worlds. They're embedded devices, sure—robots usually run on batteries, move around their physical environment, and regularly experience shoddy network connectivity.

But modern robots often have much more compute capacity than your average smartwatch or refrigerator. In addition to their drivetrain and sensor arrays, these devices often have CPUs, GPUs, and disk capacity to rival a top-end server rack.

And of course, they have all the added management complexity that comes with these capabilities.

Since all new robots are custom hardware, every new robot has slightly different hardware specifications and, as a result, slightly different software deployment mechanisms. For engineers building an embedded software deployment system, there is no single, correct, generalized system design.

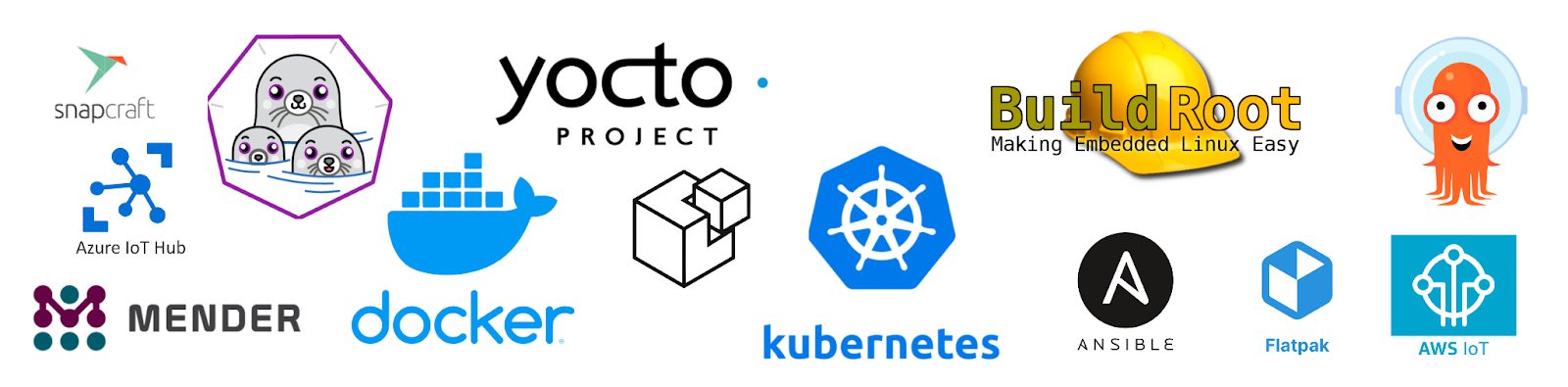

Instead, we're left to pick and choose from a long list of tools drawn from both the embedded and data center domains.

A preponderance of tools

Common technologies for packaging, deploying, and configuring software on hardware, in either or both the embedded and data center domains, include:

- Containers (Docker, Podman)

- Other packaging mechanisms (Snapcraft, Flatpak, AppImage)

- Container orchestrators (Kubernetes, Docker Swarm)

- Deployment agents (Argo CD, GitLab CI/CD, Mender)

- Embedded Linux (Ubuntu Core, OpenWrt, Buildroot, Yocto)

- Configuration management systems (Ansible, Salt, Puppet)

- IoT cloud frameworks (AWS, Azure, GCP)

- Virtualization mechanisms (VMware, KVM)

... and more. This article is not a guide to which of these technologies is best—all of them are appropriate for certain applications some of the time.

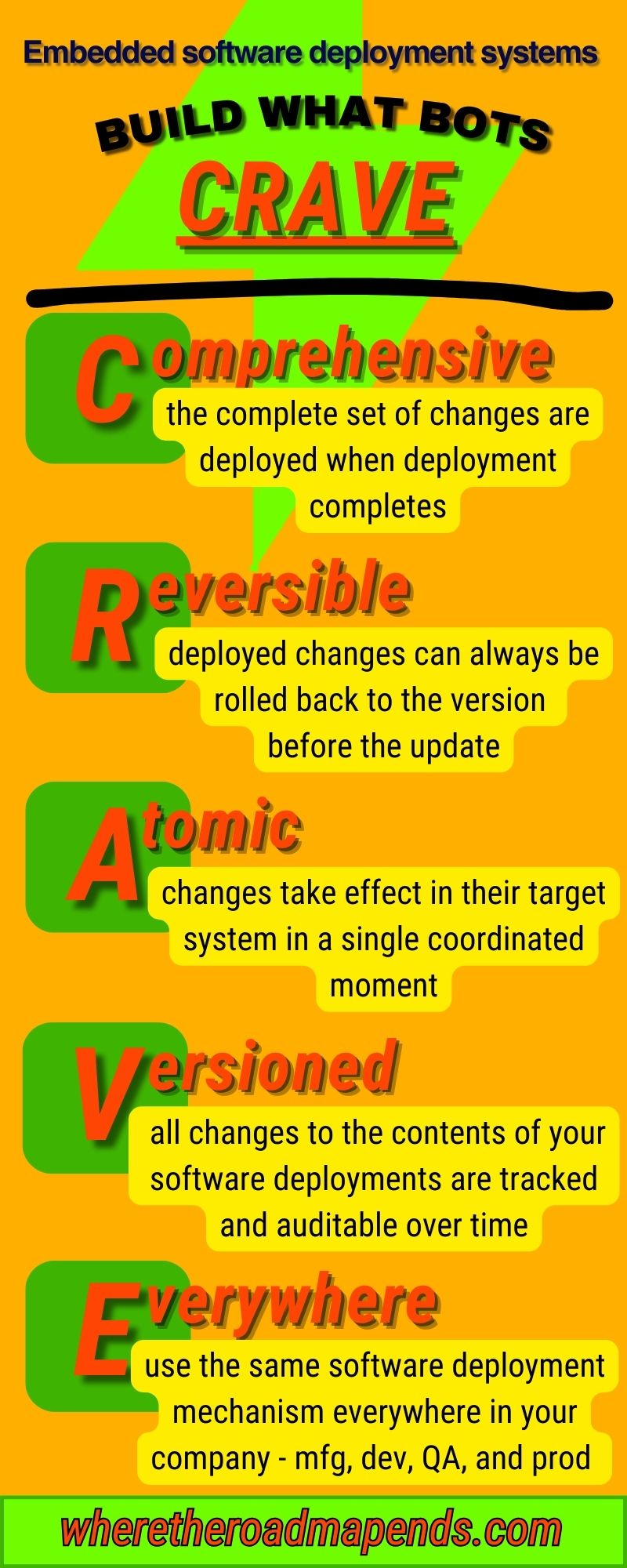

Instead, stay focused on a high-quality design for your particular embedded software deployment system with one simple acronym: CRAVE. The five requirements are Comprehensive, Reversible, Atomic, Versioned, and Everywhere.

Regardless of product domain, hardware complexity, or company growth stage, designs that fulfill these five requirements will serve your business well.

What bots CRAVE

Comprehensive

Comprehensive software deployment systems ensure that the entire set of changes is deployed when a deployment completes. Depending on what you're deploying, comprehensive could refer to a single binary, an application with all of its dependencies, or an entire root file system.

This means that when a deployment finishes, the entire system has been updated to a comprehensively-known state. Only allow incremental patching if you can reliably and deterministically sum up those patches to a single, well-understood, deployed version of your software.

It's important to note that "comprehensive" does not necessarily mean that the entire deployment payload gets pushed onto your device every time. For example, many deployment systems use caching mechanisms to minimize network transfer. Caching is great—just make sure you're always cache-busting correctly when necessary.

Comprehensive deployments ensure that you've deployed everything you think you've deployed. If your deployment mechanisms don't include a way for you to do this, you'll eventually lose track of what's deployed on your devices!
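One way to check that you've deployed everything you think you've deployed is to compare the files on the device against a manifest generated at build time. The sketch below is a minimal illustration, assuming the payload is a directory tree and the manifest maps relative paths to SHA-256 digests. The function names (`file_digest`, `build_manifest`, `verify_deployment`) are hypothetical and do not come from any of the tools listed above.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of one file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map every file under root (by relative path) to its digest."""
    return {
        p.relative_to(root).as_posix(): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_deployment(root: Path, expected: dict[str, str]) -> list[str]:
    """List every difference between the device and the expected manifest."""
    actual = build_manifest(root)
    problems = []
    for name in sorted(expected.keys() | actual.keys()):
        if name not in actual:
            problems.append(f"missing: {name}")
        elif name not in expected:
            problems.append(f"unexpected: {name}")
        elif actual[name] != expected[name]:
            problems.append(f"modified: {name}")
    return problems
```

An empty list from `verify_deployment` means the device matches the manifest exactly. Flagging unexpected files as well as missing ones is a deliberate choice here: leftovers from an earlier release are one of the ways a device drifts away from a known state.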

Designing your system to perform comprehensive updates also helps deliver reproducible deployments. Without comprehensiveness, you'll have no way of knowing that you've deployed the same thing across your device fleet, or that a piece of software that works on one machine will work when deployed onto another.

Reversible

Reversible deployment mechanisms can always be rolled back to the version they were on before the update. Ideally, if a deployment fails for some reason, the software system will roll itself back without any human intervention.

It's OK if this is actually "roll forward" or "deploy again from onboard cache". The important design goal here is not to get stuck on a botched deployment, forcing yourself to commit to emergency engineering work if a bug is found after a release.
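The self-rollback behavior described above can be sketched in a few lines. This is a simplified illustration, not a production design: `activate` and `is_healthy` are hypothetical callbacks standing in for whatever mechanism switches versions on your device and whatever check tells you the new version works.

```python
from typing import Callable


def deploy_with_rollback(
    current: str,
    target: str,
    activate: Callable[[str], None],
    is_healthy: Callable[[], bool],
) -> str:
    """Activate target; restore current if activation or the health check fails.

    Returns the version that is active when the function exits.
    """
    try:
        activate(target)
        if is_healthy():
            return target
    except Exception:
        pass  # treat a crashed activation like a failed health check
    activate(current)  # roll back without human intervention
    return current
```

The sketch assumes the previous version is still available on the device when the rollback runs. In practice that usually means keeping the old payload on disk until the new one has passed its health check.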

Reversibility is essential for minimizing the risk associated with software deployments. Without it, every update is a dangerous endeavor—if the update goes awry, you'll be stuck fixing forward, or worse, troubleshooting a bricked device.

Without reversibility, your engineering team will underperform. Because of the risks involved, a lack of reversibility will force your team to ship changes less frequently, iterate less freely, and build the product more slowly.

Atomic

Atomic deployment mechanisms ensure that your changes take effect in their target system in a single, well-coordinated moment. Effectively, for every software deployment from version A to version B, there should be a single, well-understood moment in time before which the system was running version A, and after which it was running version B.

Depending on the specific deployment mechanism in question, atomicity can take many forms. For base operating system deployments, this is often a system reboot. For Kubernetes-orchestrated workloads, it could be a new Pod replacing an old Pod behind a Service.
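For application-level payloads on a POSIX file system, one common way to get this single moment is to stage each release in its own directory and switch a `current` symlink with a rename. The article does not prescribe this technique; it is shown here as one example, and the directory layout and function names are assumptions made for illustration.

```python
import os
from pathlib import Path


def activate_release(releases: Path, version: str, current: Path) -> None:
    """Point the `current` symlink at releases/<version> in one rename."""
    target = releases / version
    if not target.is_dir():
        raise FileNotFoundError(f"release not staged: {target}")
    tmp = current.with_name(current.name + ".tmp")
    if tmp.is_symlink() or tmp.exists():
        tmp.unlink()  # clear a leftover from an interrupted attempt
    tmp.symlink_to(target)
    os.replace(tmp, current)  # the rename swaps the link atomically on POSIX


def running_version(current: Path) -> str:
    """Report which release the `current` symlink points at."""
    return Path(os.readlink(current)).name
```

Because the new release is fully staged before the rename, anything that resolves `current` sees either the old release or the new one, never a half-written mix. Processes that already have files open from the old release keep using them until they restart, so a service restart is still part of the switch.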

Regardless, atomic deployment mechanisms ensure that your individually-versioned units of software do not update incrementally. If you have one hundred virtual machines in an autoscaling group and you want to gradually transition from A to B across the cluster, that's fine—but at any given moment, you should know for certain whether any individual machine is running version A or B.

Atomicity lets you pinpoint which version of a piece of software to associate with behavioral changes. If you don't know exactly when your changes took effect, you can't reliably troubleshoot which changes in your software caused them.

Atomic deployments also help you avoid disruptive incremental changes in production that might introduce compatibility issues in the middle of a software release. If your software deployment process lacks atomicity, and you allow different components of your software to float between two versions while deployments occur, then you must ensure that all combinations of those two versions across those components are compatible and function properly.

This can be very expensive—and it's easy to avoid that expense if you keep your deployment systems atomic.
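To put a rough number on that expense: if each of n components can independently be on version A or version B during a rollout, there are 2^n combinations to validate. The short sketch below enumerates them. The component names are made up for illustration.

```python
from itertools import product


def version_combinations(components: list[str]) -> list[dict[str, str]]:
    """Enumerate every mix of versions A and B across the given components."""
    return [
        dict(zip(components, combo))
        for combo in product("AB", repeat=len(components))
    ]


combos = version_combinations(["planner", "perception", "motor-driver"])
print(len(combos))  # 2 ** 3 == 8 states a non-atomic rollout can pass through
```

With three components that is already eight states, only two of which (all A, all B) are the versions you actually intended to ship.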

Versioned

If you haven't realized it by now, your software deployments need to be versioned. This means that all changes to the contents of your deployment payloads need to be tracked and auditable over time.

At first glance, versioning is simple—just check your source code into git, right? In practice, however, this design requirement often gets overlooked. Are the binaries that your compiled executables link against also versioned? Have you pinned all of your package dependencies? Do you know where those dependencies come from?
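As a small example of checking one of these questions automatically, the sketch below flags dependencies that are not pinned to an exact version. It assumes your dependencies are listed in pip-style requirement lines (`name==version`). The regular expression is deliberately strict and simplified, so it will also flag valid forms such as URLs or environment markers. The function name is hypothetical.

```python
import re
from typing import Iterable

# Accepts only "name==exact.version"; ranges, wildcards, and bare names fail.
PINNED = re.compile(r"^[A-Za-z0-9_.\-\[\]]+==[A-Za-z0-9_.!+\-]+$")


def unpinned_requirements(lines: Iterable[str]) -> list[str]:
    """Return requirement lines that do not pin an exact version."""
    floating = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if not PINNED.match(line):
            floating.append(line)
    return floating
```

Running a check like this in CI turns "have you pinned all of your package dependencies?" from a review question into a failing build. It says nothing about where those dependencies come from, which still needs its own answer.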

Versioning is essential if you want to actually know what software you are running on your device after a deployment. It is a critical component of reproducibility, too—without versioning, you can't ever really know if a deployment on one device will work on another, because you have no reliable mechanism for telling whether the two devices are actually running the same software.

If a system component's version is floating or unspecified, a change to that version might break your system's functionality in a way that would be very difficult to root cause. Incorporating versioning into your deployment system design prevents this expensive mistake.

Everywhere

For software deployment systems, "everywhere" means "everywhere at your company".

This means that you should strive to use the same deployment mechanisms in your:

- Feature development processes.

- Quality assurance and testing procedures.

- Manufacturing line software installation.

- Production software over-the-air update systems.

In other words, don't invent different deployment systems for different parts of your company or workflow.

Abandoning this design requirement can be tempting, but you will regret it eventually. Over time, changes and fixes will make it into some deployment systems, but not others. And as the implementation details diverge, software that works properly in one part of your company will stop working in another.

This will cause chaos and mistrust, and slow down your feature delivery.

Once this deployment system divergence takes hold, it will naturally worsen over time. As separate individuals and teams run into deployment issues, they'll develop local, ad hoc solutions to solve the problems they run into. This leads to your engineering organization duplicating lots of automation work while delivering product updates more slowly and less reliably.

Build what bots CRAVE

The five requirements of the CRAVE framework are an ideal—it's probably impossible to build a system that fulfills them all completely. Software engineering is a game of tradeoffs—for every product, at every company, engineers should prioritize these criteria as time allows, weighing them against the needs of their employers.

Even if you can't build exactly the deployment systems your robots CRAVE, it's still worth it to get as close as possible to this ideal. The five design criteria outlined in this article can help steer your company away from the classic "works on my machine" quagmire, where intermittent, environment-specific bugs are never root-caused or fixed and software quality rots away over time.
